One of the challenges when working in integration is troubleshooting. This becomes even more challenging when you start using a new product.

Recently I worked with Oracle Product management (Thank you Darko and Lohit) to troubleshoot issues with an OAuth configuration of APIs in Oracle API Platform Cloud Service.

Setup

The setup was as follows:

- An API Gateway node deployed to Oracle Compute Cloud Classic as an infrastructure provider

- Oracle Identity Cloud Service in the role of OAuth provider

We set up an API with several policies, including OAuth for security. When we called the service, it returned a '401 Unauthorized' error.
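To reproduce the 401 outside of a test tool, a small script can call the API with the OAuth token as a bearer token and report the HTTP status. This is a minimal sketch using only the Python standard library; the gateway URL and the token in the usage comment are placeholders, not values from our setup.

```python
import urllib.error
import urllib.request


def build_request(url: str, access_token: str) -> urllib.request.Request:
    """Create a GET request that carries the OAuth token as a bearer token."""
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


def call_api(url: str, access_token: str) -> int:
    """Call the API and return the HTTP status code, including error codes."""
    request = build_request(url, access_token)
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx responses; the status code is what we want.
        return error.code


# Example usage (hypothetical URL and token, replace with your own):
# print(call_api("https://gateway.example.com/myapi/resource", "<access token>"))
```

Running the same call after every configuration change makes it easy to see whether the status changes from 401 to 200.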

Oracle API Platform Cloud Service troubleshooting

Oracle API Platform Cloud Service offers analytics for each API. You can navigate there by opening the API Platform Management portal, clicking on the API you want to troubleshoot and then clicking on the Analytics tab (this is the bottom tab).

Set the period you are interested in and click on Errors and Rejections. When you are troubleshooting, you usually want to see the last hour.

Figure: Different types of analytics for an API

Now you can scroll down to the error distribution and see the errors that occurred. In this case, because I selected "Last Week", you see a number of different errors that occurred last week and how often they occurred. When you run your test again, you will see one of the errors in the distribution increase, giving you insight into the type of error.

Figure: Distribution of each error type

We tried different configurations, as you can see from the distribution. The graph tells us that in one case the OAuth token was invalid and that in another case we had a bad JWT key. This meant we had to take a look at the configuration of the OAuth profile of the Oracle API Gateway node (see the documentation on how to configure Oracle Identity Cloud Service as OAuth provider).

OAuth token troubleshooting

We had a token, but it appeared to be invalid. It is hard to troubleshoot security: what is wrong with our configuration? Why are we getting the errors that we get? Once you have successfully obtained an OAuth token, you can inspect it with the JSON Web Token (JWT) debugger at jwt.io.

- Navigate to https://jwt.io

- Click on Debugger

- Paste the token in the window on the left-hand side

Figure: JWT debugger with default token example

The debugger shows you a header, the payload and the signature.

Header

The header states the signing algorithm that is used (alg), for example HS256 or RS256, and the token type (typ), for example JWT.

Payload

The payload contains the claims. There are three types of claims: registered, public and private. Registered claims are fields like iss (issuer, in this case https://identity.oraclecloud.com/), sub (subject), aud (audience) etc. For more information, see https://tools.ietf.org/html/rfc7519#section-4.1

Signature

The signature of the token, to make sure nobody tampered with it.

Now you can compare the values in the token to what you have put in the configuration of Oracle Identity Cloud Service and the configuration of the Oracle API Gateway node.
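If you prefer not to paste a token into a website, the same inspection can be done locally. The sketch below decodes the header and payload of a JWT and lists the claims that differ from the values you expect. It does not verify the signature, and the function names and expected values are my own illustration, not part of any Oracle tooling.

```python
import base64
import json


def decode_segment(segment: str) -> dict:
    """Decode one base64url-encoded JWT segment into a dictionary."""
    # JWT segments omit the base64 padding, so restore it before decoding.
    padded = segment + "=" * (-len(segment) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def inspect_token(token: str) -> tuple:
    """Return (header, payload) of a JWT WITHOUT verifying the signature."""
    header_b64, payload_b64, _signature = token.split(".")
    return decode_segment(header_b64), decode_segment(payload_b64)


def find_mismatches(payload: dict, expected: dict) -> dict:
    """Return {claim: (expected, actual)} for every claim that differs."""
    return {
        claim: (value, payload.get(claim))
        for claim, value in expected.items()
        if payload.get(claim) != value
    }


# Example usage (the expected audience is a hypothetical value):
# header, payload = inspect_token(my_token)
# print(header)
# print(find_mismatches(payload, {
#     "iss": "https://identity.oraclecloud.com/",
#     "aud": "https://gateway.example.com/myapi",
# }))
```

Note that aud may be a single string or a list of strings, so a reported mismatch on that claim can simply mean the token carries more than one audience.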

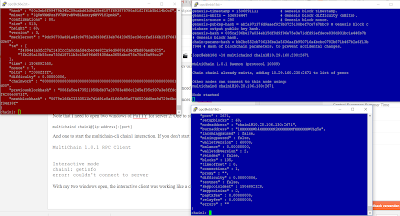

Oracle API Platform Gateway Node troubleshooting

Apart from looking at the token and the analytics, it can help to look at the log files on the gateway node. The gateway node is an Oracle WebLogic Server with some applications installed on it.

There are several log files you can access.

- apics/logs. In this directory you find the apics.log file. It contains stack traces and other information that help you troubleshoot the API.

- apics/customlogs. If you configured a custom policy in your API, the log files are stored in this directory. You can log the content of objects that are passed in this API. See the documentation about using Groovy in your policies for information about the variables that you can use.

- 'Regular' Managed server logs. If something goes wrong with the connection to the Derby database, or other issues occur that have to do with the infrastructure, you can find the information in /servers/managedServer1/logs directory.
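These log files can get large, so it helps to filter them for the terms you are chasing. The following is a minimal sketch that returns the matching lines with their line numbers. It makes no assumption about the log format, and the path and keywords in the usage comment are examples only.

```python
from typing import Iterable, Iterator


def find_matching_lines(lines: Iterable[str], keywords: Iterable[str]) -> Iterator[tuple]:
    """Yield (line_number, line) for lines containing any keyword, ignoring case."""
    lowered = [keyword.lower() for keyword in keywords]
    for number, line in enumerate(lines, start=1):
        text = line.rstrip("\n")
        if any(keyword in text.lower() for keyword in lowered):
            yield number, text


# Example usage (the path is relative to your own gateway domain directory):
# with open("apics/logs/apics.log", encoding="utf-8", errors="replace") as log:
#     for number, text in find_matching_lines(log, ["oauth", "jwt", "exception"]):
#         print(f"{number}: {text}")
```

Because the function accepts any iterable of lines, the same filter works on apics.log, on the custom logs and on the managed server logs.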

Summary

When troubleshooting APIs that you have configured in Oracle API Platform Cloud Service, you can use the following tools:

- jwt.io Debugger. This tool lets you inspect OAuth tokens generated by a provider.

- Oracle API Platform Cloud Service Analytics. Shows the type of error.

- Oracle API Platform logging policies you put on the API. Lets you log the content of objects.

- Log files in the API Gateway node:

  - {domain}/apics/logs for the logs of the gateway node. Contains stack traces etc.

  - {domain}/apics/customlogs for any custom logs you entered in the API

  - {domain}/servers/managedServer1/trace for default.log of the managed server

Happy coding!